Blog archive by category

All posts in the framework category

AFIM workgroup meeting

Posted July 31, 2006 by Pascal Baltazar

In July, a French development team linked to a workgroup commissioned by the AFIM (French Association of Musical Computing) met in Paris. Below is a summary written by Pascal and posted to the Jamoma-devel mailing list:

First, this group is related to a workgroup commissioned by the AFIM (French Association of Musical Computing). For those who read French, there’s a report of our activity here:

http://didascalie.net/tiki-index.php?page=afim-pagepublique

(We’ll try to translate it some day soon…)

For those who don’t read French, roughly speaking, we aim at:

- Addressing the practices, tools and professional roles around the various ways sound is used in the performing arts.

- Establishing a state of the art of the existing and available tools for audio composition and interpretation in the performing arts, and charting a course for future development.

It was along the way that we discovered Jamoma, and also Integra.

Our goals are not so distant from those of the Integra group, even if we work in a different field. That’s why I attended one of their meetings in Krakow, to learn more about their activity. We also have one person in common in both groups: Thierry Codhuis, CEO of La Kitchen, Paris. Of course, we look forward to working out how we might collaborate with Integra in the future. I’m convinced we are highly complementary and have converging goals, and that we will succeed in finding a way to collaborate… I’ll get back to Lamberto, of Integra, in the autumn, when we have a clearer picture of where we’re going….

So, as a first phase of our future development, we have set up, as a kind of parallel group, a team that we’ve called EVE (for Experimental Virtual Environment, or whatever you wish…). This development of a common framework for building audio environments is of course based on Jamoma, and is beginning right now. We plan to have an operational draft by the end of the summer. We’ll use it for several projects and distribute it to a small circle of users in order to get some feedback. For now, the aim is not to distribute it widely or to advertise it, as we consider it a beta version and will be writing the documentation along the way….

The developers in the team are:

- Mathieu Chamagne, Nicolas Carrière and Pascal Baltazar, composers.

- Olivier Pfeiffer and François Weber, sound managers for theatre.

- Jean-Michel Couturier, interactive systems designer (http://www.blueyeti.fr/en/).

- Jean-Louis Larcebeau and Guy Levesque, sound managers, Tom Mays, composer, Anne Sedes, academic and composer, and Georges Gagneré, stage director, who will mainly observe the development and give some feedback.

What we’ll try to achieve this summer consists of:

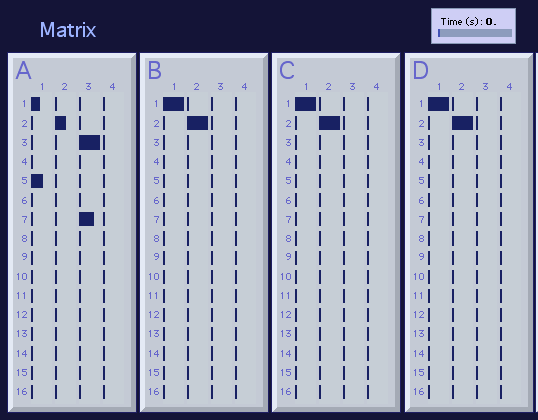

- A wrapping system, to build automatically the setup with a menu/jit.cellblock system. We will use the “group” system designed by Mathieu, which is a way to hide/show bpatchers by groups, thus making a kind of Mozilla-like Tab system. There will be the possibility to have either a pattrstorage or a cuelist on top of everything, to manage cues and states. We’ll also allow the possibility to have preset sub-spaces, for example in auxilliary windows (for example, for audio-matricing : to have matricing presets changing less often than other cues, but although commanded by the main preset management system… There will also be a vu-meter space, to monitor inputs and outputs, and a buffer management system, to dynamically build a bunch of buffers, connected to all the RAM modules. Here comes a little sketch of it, as a fullscreen patch:

- An audio-matrixing system, with a multislider interface allowing progressive sends, based on François’s SeqCon Matrix: http://hapax84.free.fr/telecharg.html or http://www.macmusic.org/news/view.php/lang/en/id/3620/

- A kind of host module, to gather several modules (for example a generator and two filters), connect them in series, and then send them to the matrix. This is to achieve a branch/trunk system and avoid having a thousand inputs to the matrix when some modules will always be connected in the same way. There will also be a pattrstorage on top of it, and one or several audio sends, to route the signal to the matrix and/or the spatialisation module. A little envelope follower will be placed at the end of the chain and will return the volume as a data stream to be mapped anywhere…

- A spatialisation module, to gather Trond’s fantastic ambisonics modules, manage trajectories, etc…. Mathieu was also thinking about implementing a DBAP (distance-based amplitude panning) module that could become part of the Jamoma distro….

- Several “generative” modules: audio input, RAM player (groove~-based), granular synthesis (also buffer~-based), direct-to-disk (1 to 8 tracks), simple oscillator/synth….

- Several “effects” modules: VST host, envelope generator (based on multislider), basic reverb, variable delay, pitch shift, plus, of course, the modules of the Jamoma distro.

- Several mapping modules:

- “simple” one-to-one mappers (based on Z’s, already discussed here): continuous, triggers, threshold detectors (rising and falling edges → triggers), timelines (based on multisliders). Following a suggestion from Alexander, I’m thinking of implementing them as separate jmod modules (one for each type of mapper), with an argument determining the number of mapper instances (i.e. bpatchers) created inside one module.

- more sophisticated mapping modules, such as the one Mathieu developed with the pmpd objects (physical models), or a 2D mapping module (based on something like tap.jit.ali…)

- Some input modules:

- A Wacom module, to be developed by Jean-Michel Couturier, the developer of the wacom Max external for Mac.

- several sensor inputs, each based on specific hardware (such as La Kitchen’s Kroonde, the WiSe Box, etc.)

- a pre-mapping module to scale the sensor values to a standard range (e.g. 0. to 1.) and perform some other operations

These last two will be developed by Nicolas, so I suggest that Nicolas and Jean-Michel get in touch with Alexander to learn about the standardization work he has already done in this area. I will send an e-mail to all three so that they can begin discussing these issues.

Some of these modules could also become part of the Jamoma distro, such as Mathieu’s DBAP module, François’s VST module, Jean-Michel’s Wacom module, and my simple mappers…
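The “simple” one-to-one mappers (continuous mapping, threshold detectors turning rising and falling edges into triggers) and the pre-mapping stage that scales sensors to a 0. to 1. range can be sketched in a few lines. This is a minimal Python illustration, not the Max/MSP implementation the team planned: the names `scale_to_unit`, `continuous_map` and `ThresholdDetector` are hypothetical, and the sketch assumes plain linear scaling with clipping and a single threshold without hysteresis.

```python
from typing import Optional


def scale_to_unit(value: float, in_min: float, in_max: float) -> float:
    """Pre-mapping (hypothetical helper): linearly rescale a raw sensor
    value to 0.-1., clipping anything outside the input range."""
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    normalized = (value - in_min) / (in_max - in_min)
    return min(1.0, max(0.0, normalized))


def continuous_map(x: float, out_min: float, out_max: float) -> float:
    """'Continuous' one-to-one mapper (hypothetical): map a 0.-1. value
    linearly onto a parameter range."""
    return out_min + x * (out_max - out_min)


class ThresholdDetector:
    """Threshold mapper (hypothetical): returns 'up' when the input crosses
    the threshold upwards (rising edge) and 'down' when it crosses back
    below it (falling edge); otherwise returns None."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self._above: Optional[bool] = None  # unknown until the first sample

    def feed(self, x: float) -> Optional[str]:
        above = x >= self.threshold
        previous, self._above = self._above, above
        if previous is None or previous == above:
            return None  # first sample, or no crossing
        return "up" if above else "down"


if __name__ == "__main__":
    detector = ThresholdDetector(0.5)
    raw_readings = [0, 300, 700, 1023, 400, 100]  # hypothetical 10-bit sensor values
    events = [detector.feed(scale_to_unit(r, 0, 1023)) for r in raw_readings]
    print(events)  # [None, None, 'up', None, 'down', None]
```

With noisy sensors, a real threshold mapper would typically use separate up and down thresholds (hysteresis) to avoid bursts of triggers around the threshold; the sketch leaves that out for brevity.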

Here are some more environments for Max/MSP modules that are more or less related to the philosophy of Jamoma. I have not yet had time to check them all out to see how they compare to Jamoma:

Cooper is a flexible environment that can be controlled at meta-parameter levels without losing direct control of single parameters or handing responsibility over to the computer. The audio processing is encapsulated in modules, which are connected through a matrix.

MaWe has been designed as a compositional and performance tool for audio and video purposes.

mirage is software written in the Max environment, initially conceived for sound and recently equipped with powerful tools for processing images (Jitter, SoftVNS, etc.).

lloopp is software written in Max/MSP, designed for live improvisation on a Macintosh.

Framework

Posted February 26, 2006 by Trond Lossius

There seem to be several initiatives similar to Jamoma happening at the moment. The UBC Max/MSP/Jitter Toolbox blogged here a few days ago is one of them. Yesterday I discovered another: Framework by Leafcutter John. Framework is aimed at effect processing of stereo audio signals. This is how he describes it:

I wanted to do a project where many people could contribute to a big Max patch for treating audio. So I developed Framework, a free to use / modify modular audio patch made in Max/MSP.

The framework part of the patch comprises 9 bpatcher objects connected in series. The user loads different kinds of audio treatment modules into the bpatchers.

You play a stereo file into the framework using splay~. Or you can use a mic input. If you want to do something different to this then please hack the patch!

He encourages others to make new modules and e-mail them to him for inclusion in future releases.

UBC Max/MSP/Jitter Toolbox

Posted February 23, 2006 by Trond Lossius

First posted 02/23/2006 10:31:21.

UBC Max/MSP/Jitter Toolbox is a modular, standardised approach to developing modules in Max/MSP, somewhat resembling Jamoma. The UBC Max/MSP/Jitter Toolbox is a collection of modules for creating and processing audio in Max/MSP and manipulating video and 3D graphics using Jitter. Alexander has briefly tested it and has posted some screenshots from one of the example patches.

UBC Max/MSP/Jitter Toolbox is developed by, among others, Keith Hamel, Bob Pritchard, and Nancy Nisbet.

By way of Alexander’s blog.